Johnny-Five is the key JavaScript Node module that we are using in this project. Essentially, it allows JavaScript to talk to the Arduino over a standard protocol called Firmata; the Arduino itself runs the StandardFirmata sketch. You can check the documentation in the link above - it bundles up all the types of inputs and outputs you would need in a very easy-to-use manner.

However, one problem you'll find when working with open source packages is that they can change on you without notice, quickly and quietly. Furthermore, these packages do not always have your application in mind, and thus will not necessarily have documentation to support what you want to do! This blog post will detail some problems we had while using this package - but of course I should state that it's still an absolutely AMAZING package and we wouldn't be here without it.

Multiple Boards

One of the things Johnny-Five does not document very well is how to support multiple boards in one JavaScript script.

Here is the documentation they have; it is good for the application they have in mind, but not for our purposes. It seems that using the

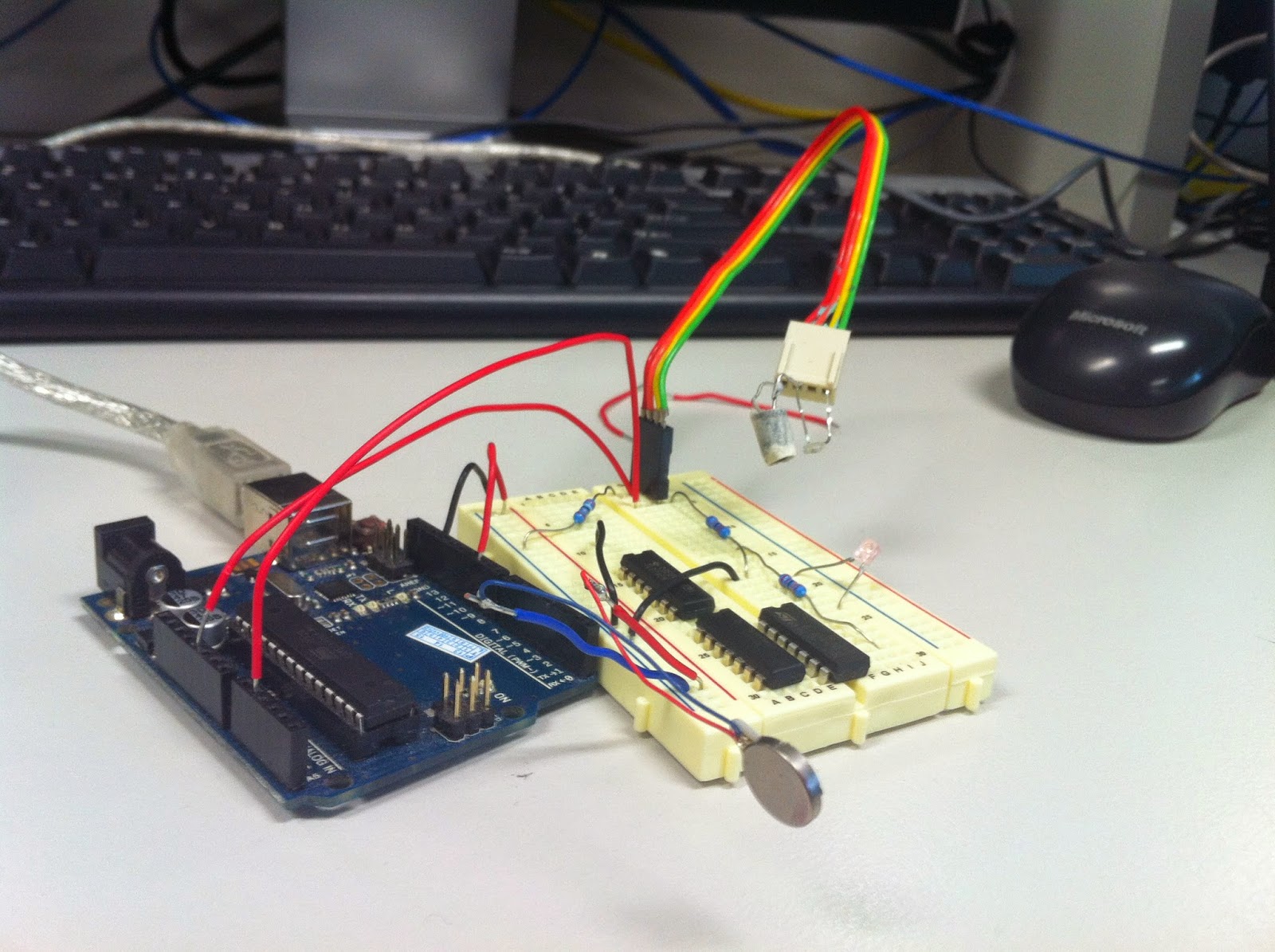

Boards object allows you to run the same code on BOTH the Arduino's, but not different code on each - which is what we'd like to do. The circuitry for control on the arm, and the IR sensors were on different boards. One uses an Arduino MEGA, one uses an Arduino UNO. (The vibration motors also use an Arduino UNO, but at this time we were just focussing on the 'server-side' system).

The problem was exacerbated by the fact that Ashan and I were using different laptops - a Mac and a Windows machine. Thus, every time we tried to experiment with the same code, we'd get different results.

See below for the different ways you can go about creating multiple boards according to the documentation.

The first method is an automatic method of acquiring the devices. However, this doesn't guarantee you'll get the same one each time. We tried this, and it did not always assign the correct board.

The second method assigns the ports correctly, but because of the way the class is created in Johnny-Five, it does not allow you to control the boards separately. The third way outlined above is highly similar to the second way.

The actual solution, which is MUCH easier, is as follows:

1. Create different board objects

2. Make sure that each object you create is assigned to the correct board

Each object that is created is given a 'board' property that records which board it is attached to. I could not find this feature in the documentation for the individual components - I just hoped that it would be an exposed property of the object, and it was!
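The two steps above can be sketched roughly as follows. This is a minimal sketch rather than our exact project code: the function name `createBoards`, the port names, and the pin numbers are all hypothetical placeholders, and `five` is the johnny-five module passed in as an argument so the wiring stays explicit.

```javascript
// Sketch only: `five` is the johnny-five module, passed in by the caller.
// The ids, ports and pins below are hypothetical placeholders.
function createBoards(five, ports) {
  // Step 1: one Board object per physical Arduino, each bound to an explicit port.
  const armBoard = new five.Board({ id: "arm", port: ports.arm, repl: false });
  const sensorBoard = new five.Board({ id: "sensors", port: ports.sensors, repl: false });

  // Step 2: pass the `board` option so each component attaches to the intended board.
  armBoard.on("ready", () => {
    const servo = new five.Servo({ pin: 9, board: armBoard });
    servo.center();
  });

  sensorBoard.on("ready", () => {
    const ir = new five.Sensor({ pin: "A0", board: sensorBoard });
    ir.on("change", () => console.log("IR reading:", ir.value));
  });

  return { armBoard, sensorBoard };
}

// Usage (needs `npm install johnny-five` and two connected boards):
// const five = require("johnny-five");
// createBoards(five, { arm: "COM3", sensors: "COM4" });
```

Because every component names its board explicitly, the code for the arm and the code for the sensors can differ completely, which is exactly what we could not get out of the Boards object.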

Thus, our problem with the documentation was solved. We could use multiple boards in our JavaScript programs!

IR Communication Issues

When we decided to try and use the server-client relationship to communicate between Arduino boards (more specifically, the IR sensors and the vibration motors), a problem arose.

Namely, the IR sensors were going WILD! Sometimes they would read a consistent value, but the majority of the time they would fluctuate wildly between the actual value and half the actual value. This was the weirdest behaviour I'd ever seen, and I started troubleshooting the damn IR sensors:

- the connections were stable - I tested them individually with a separate Arduino and they were giving the right value

- the program itself was fine - I tested the IR sensors isolated on one computer, and the readings I was getting were fine.

- I tried it on both my laptop and Ashan's, and it worked! It was only with a CLEAN INSTALL on the lab computer that it would fail! WHY!

This testing stage was the most infuriating part, as I could not isolate the problem. But I finally found the solution...

Checking the documentation on the Johnny-Five website, I found that the documented way of accessing data from a sensor had only recently changed. The reason it was working on both our laptops but not on a CLEAN INSTALL is that we had an older version of the package on our computers.

The top part of this code shows how you read from the sensor in an earlier version. The bottom part of this code shows you the NEW way to read from the sensor. This was not reflected as a change anywhere that I could find, and the documentation did not reflect that there had been any change at all! The code was just different - I thought I was going crazy!

This change fixed the bug. The lesson we learnt here is that using open source packages has its drawbacks - namely, that whoever is maintaining the code may not always be clear about their changes.
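One way to catch this kind of mismatch earlier is to fail fast when the installed package version is not the one the code was tested against (alongside pinning an exact version in package.json). The helper below is a hypothetical sketch, not something we had at the time: `checkVersion` is an invented name, and `"x.y.z"` stands in for whatever version you actually tested with.

```javascript
// Hypothetical helper: throw if the installed version differs from the tested one.
function checkVersion(name, installed, expected) {
  if (installed !== expected) {
    throw new Error(
      `${name} is ${installed}, but this code was tested against ${expected}`
    );
  }
  return true;
}

// Usage (assumes the package's package.json can be required directly;
// "x.y.z" is a placeholder for the version you tested against):
// const { version } = require("johnny-five/package.json");
// checkVersion("johnny-five", version, "x.y.z");
```

With a check like this, a clean install that pulls a different version stops with a clear message instead of producing strange sensor readings.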

Damn it Johnny-Five...

U mad bro?
